If you are living in the EU, you may already have been confronted with the GDPR requirement to have a concept that guarantees that all user-sensitive information is deleted from systems after a certain time.

Because of this requirement, some customers require Tenable Core appliances to forward all logs to a central syslog server and to make sure that local logs are deleted after a specified timeframe.

Starting Point References:

- https://gdpr-info.eu/art-17-gdpr/

- https://community.tenable.com/s/article/How-to-send-Tenable-sc-logs-to-a-syslog-server

- https://community.tenable.com/s/article/An-overview-of-Tenable-sc-application-logs

- https://www.casesup.com/category/knowledgebase/howtos/how-to-forward-specific-log-file-to-a-remote-syslog-server

Update June 22nd 2021: I verified that rsyslog needs to be started once with SELinux set to Permissive; after this initial start, it works with SELinux enabled.

Todo – Missing: SC Application Logs

This post currently lacks a solution for automatically deleting the main SC Application Logs (e.g. /opt/sc/admin/logs/202105.log). The application rotates these itself on a monthly basis, so logrotate neither rotates nor deletes them!

A simple solution might be a bash script, run regularly by a cron job, that deletes these logs based on their mtime. If I need to write such a script for a customer, I will post it here as well.
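A minimal sketch of such a script, assuming the monthly logs follow the YYYYMM.log naming pattern seen in /opt/sc/admin/logs/202105.log and that 180 days is the retention your concept requires (both are assumptions to verify on your appliance). The helper name purge_sc_app_logs is hypothetical, and the script relies on GNU find.

```bash
#!/usr/bin/env bash
# Hypothetical helper: delete main SC Application Logs older than N days.
# Assumes GNU find and the YYYYMM.log naming pattern (e.g. 202105.log).

purge_sc_app_logs() {
  local log_dir="$1"   # e.g. /opt/sc/admin/logs
  local days="$2"      # retention in days, e.g. 180 (assumed value)

  # Only regular files directly inside log_dir, named like 202105.log
  # (six digits + .log), last modified more than $days days ago.
  # -print lists each match before -delete removes it.
  find "$log_dir" -maxdepth 1 -regextype posix-extended \
    -type f -regex '.*/[0-9]{6}\.log' \
    -mtime +"$days" -print -delete
}

# Act only when called with both arguments (directory and days),
# so running it without parameters deletes nothing.
if [ "$#" -eq 2 ]; then
  purge_sc_app_logs "$1" "$2"
fi
```

Saved, for example, as /usr/local/sbin/purge-sc-app-logs.sh (a hypothetical path) and made executable, it could be scheduled with a line such as `30 3 * * * root /usr/local/sbin/purge-sc-app-logs.sh /opt/sc/admin/logs 180` in a file under /etc/cron.d/. Because the check uses mtime, a monthly log is only removed once nothing has been written to it for the whole retention period. Running the find command with `-print` only (without `-delete`) first shows what would be removed.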

Forwarding of the main SC Application Log, however, works with the rsyslog setup described further down.

Making sure local logs get rotated and deleted

This can be done with logrotate, which is included in the Tenable Core appliance by default and comes with a predefined config file:

/etc/logrotate.d/SecurityCenter

You might want to create a backup of this logrotate config file and then change a couple of things:

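- By default, this config file only has logrotate rotate /opt/sc/admin/logs/sc-error.log. You can change this to /opt/sc/admin/logs/* to catch all logfiles in the directory. The main SC Application Log is rotated by the application itself and never covers more than a month, but note that a bare * also matches those monthly files and any archives logrotate has already created in the directory, so a narrower pattern or an explicit list of files may be safer.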

- Check the list of all SC logs described in the starting point references to see whether the logrotate config is missing any logfiles that require rotation and deletion after a certain amount of time.
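A small sketch for both steps, assuming the paths above. The backup location under /root and the helper name list_unlisted_logs are hypothetical choices. Keeping the backup outside /etc/logrotate.d/ matters: logrotate reads every file in that directory apart from a few ignored extensions, so a copy placed there may be picked up as a second config for the same log.

```bash
#!/usr/bin/env bash
# Back up the shipped config outside /etc/logrotate.d/ first (run as root):
#   cp -p /etc/logrotate.d/SecurityCenter /root/SecurityCenter.logrotate.bak

# Hypothetical helper: print files in a log directory whose full path does
# not appear literally in a logrotate config. Globs in the config are NOT
# expanded, so treat the output as a checklist, not a verdict.
list_unlisted_logs() {
  local conf="$1" log_dir="$2" f
  for f in "$log_dir"/*; do
    [ -f "$f" ] || continue    # skip subdirectories and an unmatched glob
    grep -qF -- "$f" "$conf" || printf '%s\n' "$f"
  done
}
```

For example, `list_unlisted_logs /etc/logrotate.d/SecurityCenter /opt/sc/admin/logs` prints every file in the SC log directory that the config does not name explicitly, including the monthly application logs and already-rotated archives, which you can then compare with Tenable's log overview.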

Look at the defined sections in the config file, e.g.:

```
/opt/sc/admin/logs/sc-error.log {
    monthly
    notifempty
    missingok
    dateext
    rotate 5
    compress
}
```

This rotates the log monthly and keeps 5 rotated files, so old logs are deleted after roughly 6 months. If your concept requires different deletion intervals, edit the sections accordingly.
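The 6 months come from the current log plus the 5 rotated files. As a rough approximation (logrotate only acts when it is run, usually once a day by cron or a systemd timer), the age of the oldest entry still on disk is bounded by:

$$
t_{\text{max}} \approx (n_{\text{rotate}} + 1) \cdot T_{\text{interval}}, \qquad (5 + 1) \cdot 1\ \text{month} = 6\ \text{months}, \qquad (14 + 1) \cdot 1\ \text{day} = 15\ \text{days}
$$

So with daily and rotate 14, the oldest entries are up to about 15 days old: 14 rotated files plus the current one.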

To test and troubleshoot this, you can replace monthly with daily, watch the log rotate over the course of a week, and then set it back to monthly. Alternatively, keep it at daily and set, for example, rotate 14 to keep 14 days of logs.
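Instead of (or in addition to) switching to daily, logrotate itself can show what it would do: `-d` runs in debug mode without changing any files, and `-f` forces a rotation even if the interval has not elapsed yet. A sketch wrapping both, with hypothetical function names; note that `-f` really rotates (and may delete) logs, so use it only on a test system or after a backup.

```bash
#!/usr/bin/env bash
# Hypothetical wrappers around logrotate's own test options (run as root).

check_sc_logrotate() {
  local conf="${1:-/etc/logrotate.d/SecurityCenter}"
  # -d: debug mode; prints what would happen, rotates nothing
  logrotate -d "$conf"
}

force_sc_logrotate() {
  local conf="${1:-/etc/logrotate.d/SecurityCenter}"
  # -f: force rotation now; -v: verbose. This changes files on disk!
  logrotate -f -v "$conf"
}
```

Passing only this file skips the global defaults from /etc/logrotate.conf, so the output can differ slightly from the scheduled run; `logrotate -d /etc/logrotate.conf` checks the complete configuration instead.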

Forwarding the main SC Application Log to a central syslog server using rsyslog

The Tenable Core appliance comes with rsyslog preinstalled, and Tenable's knowledge base article on syslog forwarding (linked at the beginning of this post) is a good starting reference.

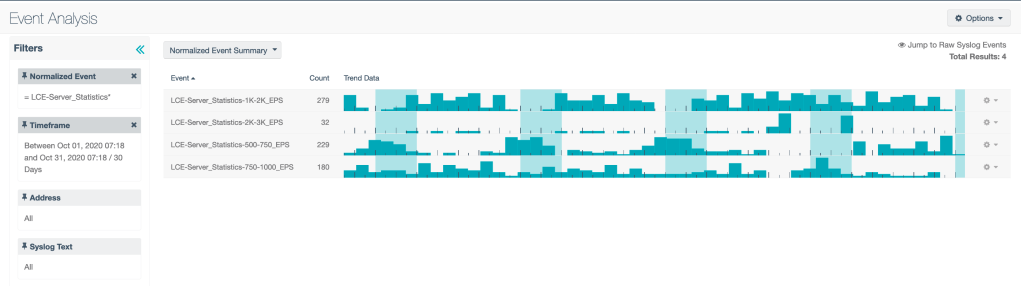

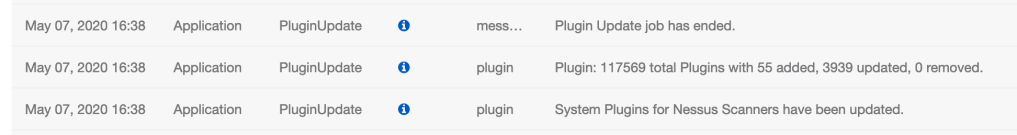

If you implement this, you will see some error messages in the rsyslog logfile, but it still works and forwards the main SC Application Log via the LOG_USER facility.
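After editing the rsyslog configuration, it may help to let rsyslogd validate the syntax before restarting the service: `rsyslogd -N1` performs a config check without starting the daemon. A sketch assuming the systemd unit is named rsyslog (as on RHEL/CentOS-based systems); the function name is hypothetical. rsyslog's own error messages may show up in the journal and, on RHEL-based systems, typically also in /var/log/messages.

```bash
#!/usr/bin/env bash
# Hypothetical helper: validate the rsyslog config, then restart (run as root).
apply_rsyslog_config() {
  # -N1: configuration check only; stop here if it reports errors
  rsyslogd -N1 || return 1
  systemctl restart rsyslog
  # Show the latest messages of the service to spot startup errors
  journalctl -u rsyslog -n 20 --no-pager
}
```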

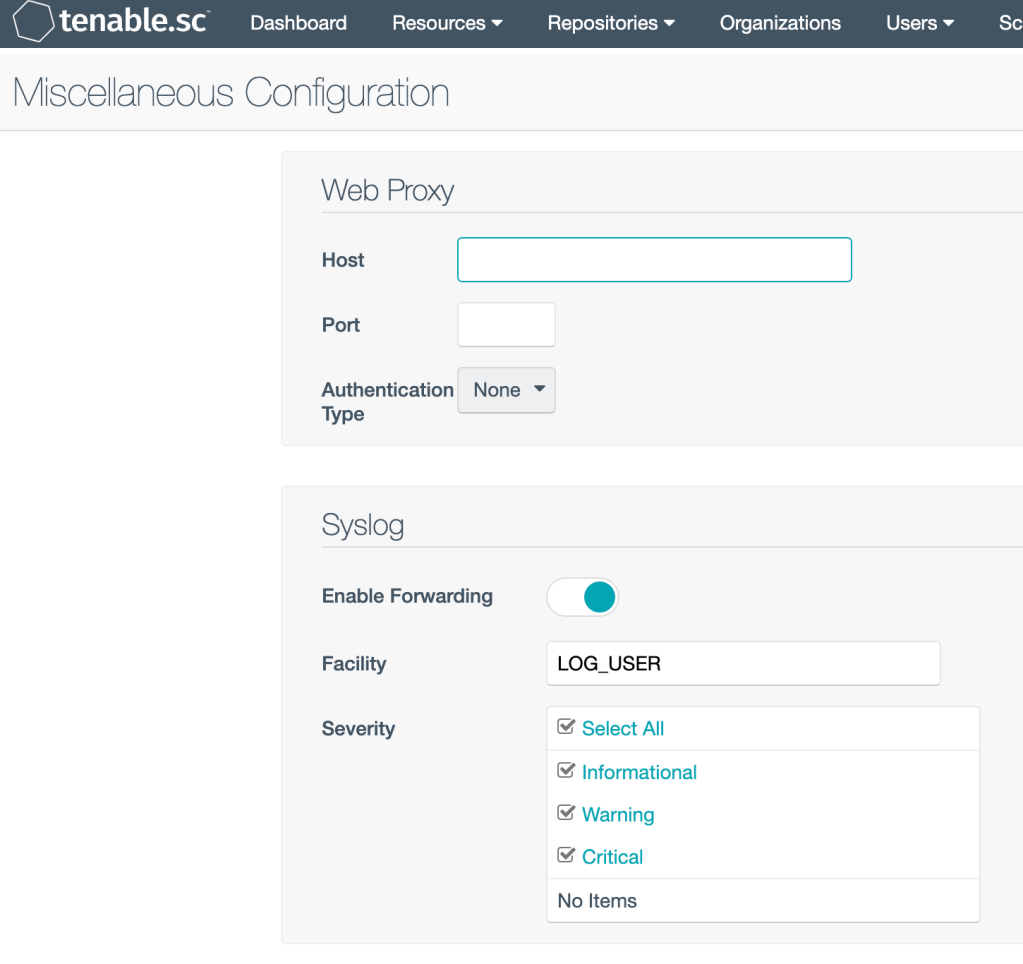

Attention: Don't forget to enable SC Application logging in the SC Admin panel under Misc.

To troubleshoot this, run a local ncat or nc listener on a TCP or UDP port, point rsyslog at your machine (if it is reachable from the appliance), and read the forwarded logs in cleartext:

```bash
# UDP listener (binding port 514 requires root)
ncat -lup 514
nc -nlvpu 514

# TCP listener (TCP is the default, so no protocol flag is needed)
ncat -lp 514
nc -nlvp 514
```

These commands open a UDP (or TCP) listener on port 514. UDP matches the forwarding line from the Tenable Community KB article, where a single @ means UDP (@@ would mean TCP):

```
*.* @IPaddress:514
```
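To generate a test message without waiting for SC to log something, util-linux's logger can either write a LOG_USER message to the local syslog (which rsyslog should then forward) or send one straight to the listener to test only the network path. The function names, the tag sc-forward-test and the message texts are hypothetical placeholders.

```bash
#!/usr/bin/env bash
# Hypothetical helpers for testing the forwarding path with util-linux logger.

# 1) Full path: write a user.notice message to the local syslog;
#    rsyslog should forward it to the configured server.
send_local_test() {
  logger -p user.notice -t sc-forward-test "rsyslog forwarding test"
}

# 2) Network path only: send directly via UDP (-d) to server:port,
#    bypassing the local rsyslog configuration.
send_direct_test() {
  local server="$1" port="${2:-514}"   # e.g. 192.0.2.10 (placeholder)
  logger -n "$server" -P "$port" -d -p user.notice -t sc-forward-test "direct UDP test"
}
```

If the direct message reaches the listener but the local one does not, the problem is more likely in the rsyslog configuration (or SELinux) than in the network.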

If you see permission errors in the rsyslog logfile, these can be traced to SELinux. In my tests, forwarding of the LOG_USER syslog facility (the main SC Application Logs) still worked.

Encrypting the syslog traffic is not part of this post, but you can look it up by searching for rsyslog encryption in the search engine of your choice.

Forwarding other logfiles on the core appliance to a central syslog server using rsyslog

If you want or need to go all in, you can tell rsyslog to read local logfiles and send their content to the syslog server.

Among the references at the beginning of this post is the casesup.com article I used to test this.

Basically, you create a new rsyslog config file with an arbitrary name under /etc/rsyslog.d/ (accesslog.conf in this example) that specifies which log to read and which syslog facility to forward it with:

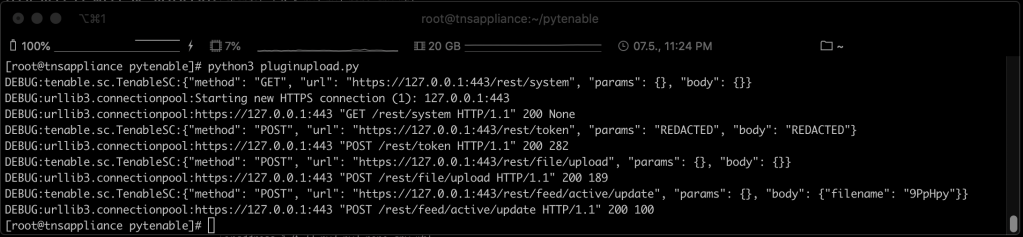

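```
# /etc/rsyslog.d/accesslog.conf
# Load the file input module; poll monitored files every 10 seconds
$ModLoad imfile
$InputFilePollInterval 10
$PrivDropToGroup adm
# Per-file settings: they must come before $InputRunFileMonitor
$InputFileName /opt/sc/support/logs/access_log
$InputFileTag APP
$InputFileStateFile Stat-APP
# Severity must be a syslog severity name (e.g. info, notice)
$InputFileSeverity info
# user = the LOG_USER facility
$InputFileFacility user
$InputFilePersistStateInterval 1000
# Start monitoring the file with the settings above
$InputRunFileMonitor
```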
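Current rsyslog versions (v8) also accept these settings in the newer RainerScript syntax, which some find easier to read. A sketch of a roughly equivalent configuration, assuming rsyslog v8 on the appliance; it is printed by a hypothetical helper so you can review it before writing it to a file. It uses the tag APP and the user (LOG_USER) facility from the example above with severity info, and leaves out $PrivDropToGroup, which is a global rsyslog setting rather than an imfile option. Use either this or the legacy file, not both, to avoid loading imfile twice and forwarding every line twice.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print a RainerScript version of accesslog.conf.
# Review the output, then e.g.: print_accesslog_conf > /etc/rsyslog.d/accesslog.conf
print_accesslog_conf() {
  cat <<'EOF'
# Poll (rather than inotify) monitored files every 10 seconds
module(load="imfile" mode="polling" PollingInterval="10")

# Read the access_log and tag/classify each line
input(type="imfile"
      File="/opt/sc/support/logs/access_log"
      Tag="APP"
      Facility="user"
      Severity="info"
      PersistStateInterval="1000")
EOF
}
```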

You can give it any tag you like; you may want to consult your SIEM admin about tagging conventions.

If you see permission errors in the rsyslog logfile, these can be traced to SELinux. In my lab setup, forwarding of the access_log logfile still worked. SELinux troubleshooting is not part of this blog post, but you can start with:

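```bash
getenforce     # prints Enforcing, Permissive or Disabled
setenforce 0   # Permissive until reboot; setenforce 1 switches back to Enforcing
```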

Attention: do not permanently disable SELinux on a hardened appliance unless you are certain of what you are doing.

Update June 22nd 2021: I verified that rsyslog needs to be started once with SELinux set to Permissive; after this initial start, it works with SELinux enabled.
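A minimal sketch of that one-time start, assuming the appliance normally runs in Enforcing mode, the systemd unit is named rsyslog, and you are root; the function names are hypothetical. setenforce only changes the runtime mode (not /etc/selinux/config), and the function switches back to Enforcing right after the restart. ausearch can then show which access the denials in the rsyslog logfile refer to, provided auditd is running.

```bash
#!/usr/bin/env bash
# Hypothetical helper for the one-time start described above (run as root).
start_rsyslog_permissive_once() {
  getenforce                     # show the mode before changing it
  setenforce 0                   # Permissive (runtime only, not persistent)
  systemctl restart rsyslog
  local rc=$?                    # remember whether the restart succeeded
  setenforce 1                   # back to Enforcing in any case
  getenforce
  return "$rc"
}

# List recent SELinux denials (AVC records) for rsyslogd; requires auditd.
show_rsyslog_denials() {
  ausearch -m AVC -c rsyslogd -ts recent
}
```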

EOL

This should help you get started with log management on the Tenable Core appliance! I may update this post once I have implemented this with a customer and learned more about the topic.

BR

Sebastian
